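I wrote a simple web crawler that collects every BIM-related job posting on Zhaopin (智联招聘) in China's first-tier and "new first-tier" cities, so that I could do some data analysis on them.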

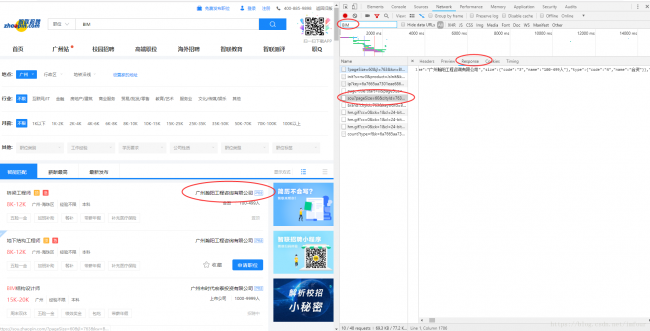

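First, I searched Zhaopin for BIM positions in Chrome. When the results page loaded, I pressed Ctrl+U to view the page source, but the job listings shown on the page were not in it, so they must be fetched separately after the page loads. I then pressed F12 to open Developer Tools, refreshed the page, filtered the network requests by keyword, and found a response that contained the job listings.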

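Inspecting this request's URL shows that it consists of `https://fe-api.zhaopin.com/c/i/sou?` followed by the query parameters. Following that pattern, I built a new URL (`https://fe-api.zhaopin.com/c/i/sou?pageSize=60&cityId=763&workExperience=-1&education=-1&companyType=-1&employmentType=-1&jobWelfareTag=-1&kw=造价员&kt=3`, where 造价员 means "cost estimator"), pasted it into the browser to test it, and got the corresponding data back.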
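The same URL can be assembled in code instead of by hand. The following is a minimal sketch that assumes the parameter names and values observed in the browser above. `BASE_URL` and `build_search_url` are hypothetical names introduced here for illustration. `urlencode` percent-encodes a Chinese keyword such as 造价员 as UTF-8, so it can be sent safely in the query string.

```python
from urllib.parse import urlencode, urlsplit, parse_qs

# Endpoint observed in the browser's network panel
BASE_URL = 'https://fe-api.zhaopin.com/c/i/sou?'


def build_search_url(city_id, keyword, start=0, page_size=60):
    """Hypothetical helper: build a search URL in the observed format."""
    params = {
        'start': start,            # offset of the first result
        'pageSize': page_size,     # results per page
        'cityId': city_id,
        'workExperience': -1,      # -1 for the filters appears to mean "no filter"
        'education': -1,
        'companyType': -1,
        'employmentType': -1,
        'jobWelfareTag': -1,
        'kw': keyword,             # search keyword
        'kt': 3,                   # search by job title
    }
    # Non-string values are converted with str(); non-ASCII text is UTF-8 percent-encoded
    return BASE_URL + urlencode(params)


url = build_search_url('763', '造价员')
print(url)

# Decoding the query string again recovers the original keyword
query = parse_qs(urlsplit(url).query)
print(query['kw'])
```

Decoding the query string back with `parse_qs` is a quick way to check that the keyword survived encoding before the URL is sent.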

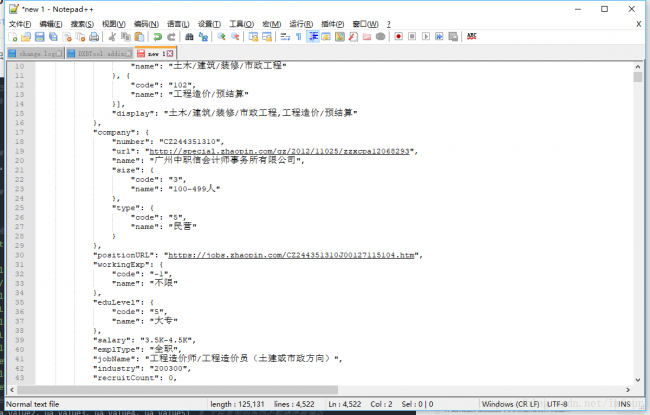

The response is JSON. I first pretty-printed it, studied its structure, and from that decided how the code should parse it.

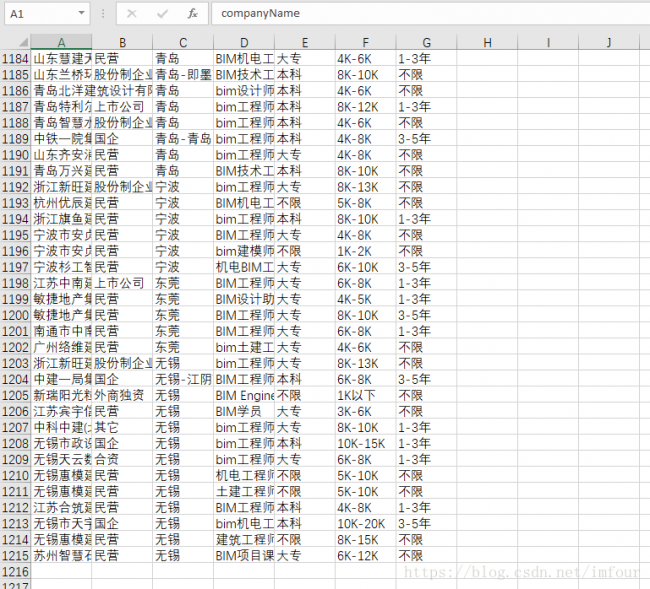
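The parsing step can be sketched as follows. The sketch assumes that the job records sit under `data` → `results` and carry the nested fields that the crawler below reads. The sample payload is hand-written and hypothetical, with made-up values. It only mirrors that shape and is not a real API response. `flatten_job` is likewise a hypothetical helper name.

```python
import json

# Hypothetical sample payload: only the fields the crawler reads, with made-up values
raw = '''
{"data": {"results": [
  {"company": {"name": "Example Co.", "type": {"name": "民营"}},
   "city": {"display": "广州-天河区"},
   "jobName": "BIM工程师",
   "eduLevel": {"name": "本科"},
   "salary": "8K-12K",
   "workingExp": {"name": "1-3年"}}
]}}
'''

payload = json.loads(raw)

# Pretty-print to inspect the nesting, much like a JSON formatter in the browser
print(json.dumps(payload, ensure_ascii=False, indent=2))


def flatten_job(job):
    """Map one nested result to a flat row keyed by CSV column names."""
    return {
        'companyName': job['company']['name'],
        'companyType': job['company']['type']['name'],
        'region': job['city']['display'],
        'jobName': job['jobName'],
        'education': job['eduLevel']['name'],
        'salary': job['salary'],
        'workExperience': job['workingExp']['name'],
    }


rows = [flatten_job(job) for job in payload['data']['results']]
print(rows[0]['jobName'])
```

Flattening each record into a dict keyed by the CSV column names lets it be passed straight to `csv.DictWriter`, which is what the crawler below does.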

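Once the request URL and the data structure were clear, all that remained was to implement URL construction, data parsing, and export in code. In the end I collected 1,215 records. These still need further cleaning before they can be analyzed.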

Code:

```python
# -*- coding: utf-8 -*-

import csv
import time
from urllib.parse import urlencode

import requests
from requests.exceptions import RequestException

# CSV column names; also the keys of each row dict
csvHeaders = ['companyName', 'companyType', 'region', 'jobName', 'education', 'salary', 'workExperience']

# Results requested per page
PAGE_SIZE = 60


def GetJobsData(cityId, keyWord, startIndex):
    '''
    Request one page of results; return the decoded JSON, or None on a non-200 status.
    '''
    # '-1' for the filter fields below appears to mean "no filter"
    paras = {
        'start': startIndex,        # offset of the first result
        'pageSize': PAGE_SIZE,      # results per page
        'cityId': cityId,           # city
        'workExperience': '-1',     # work experience
        'education': '-1',          # education level
        'companyType': '-1',        # company type
        'employmentType': '-1',     # employment type
        'jobWelfareTag': '-1',      # job benefit tags
        'kw': keyWord,              # search keyword
        'kt': '3',                  # search by job title
    }
    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/64.0.3282.140 Safari/537.36 Edge/17.17134',
    }
    # e.g. https://fe-api.zhaopin.com/c/i/sou?start=60&pageSize=60&cityId=763&workExperience=-1&education=-1&companyType=-1&employmentType=-1&jobWelfareTag=-1&kw=BIM&kt=3
    url = 'https://fe-api.zhaopin.com/c/i/sou?' + urlencode(paras)
    response = requests.get(url, headers=headers, timeout=10)
    if response.status_code == 200:
        return response.json()
    return None


def ParseData(jsonData, filename):
    '''
    Parse one page of results, append matching rows to the CSV,
    and return the number of results on the page.
    '''
    results = (jsonData.get('data') or {}).get('results') or []
    for i in results:
        # Build a fresh dict for every posting
        jobInfo = {
            'companyName': i['company']['name'],
            'companyType': i['company']['type']['name'],
            'region': i['city']['display'],
            'jobName': i['jobName'],
            'education': i['eduLevel']['name'],
            'salary': i['salary'],
            'workExperience': i['workingExp']['name'],
        }
        # Drop postings whose title does not contain "BIM" (in any letter case)
        if 'bim' in jobInfo['jobName'].lower():
            WriteCsv(filename, jobInfo)
            # Show the saved row
            print(jobInfo)
    return len(results)


def CreateCsv(path):
    '''
    Create the CSV file and write the header row.
    '''
    # gb18030, presumably so Excel on Chinese-locale Windows displays the text correctly
    with open(path, 'w', encoding='gb18030', newline='') as f:
        csv.DictWriter(f, csvHeaders).writeheader()


def WriteCsv(path, row):
    '''
    Append one data row.
    '''
    with open(path, 'a', encoding='gb18030', newline='') as f:
        csv.DictWriter(f, csvHeaders).writerow(row)


def Main(citiesId, keyWord, lastPage):
    '''
    Main function: crawl up to lastPage pages per city into one CSV file.
    '''
    # Timestamped output file name, e.g. ZL_BIM_<YYYYmmddHHMMSS>.csv
    filename = 'ZL_' + keyWord + '_' + time.strftime('%Y%m%d%H%M%S', time.localtime()) + '.csv'
    CreateCsv(filename)

    for cityId in citiesId:
        for page in range(1, lastPage + 1):
            # 'start' is treated as a 0-based offset, matching the example URL
            # above, where the second page uses start=60
            startIndex = PAGE_SIZE * (page - 1)
            try:
                # Request data
                jsonData = GetJobsData(cityId, keyWord, startIndex)
            except (RequestException, ValueError):
                # Network error or a non-JSON body: move on to the next city
                break
            # Parse data; stop paging this city on an error status or an empty page
            if jsonData is None or ParseData(jsonData, filename) == 0:
                break


if __name__ == '__main__':
    # Zhaopin city IDs of the target cities
    cities = ['530', '538', '765', '763', '801', '653', '736', '639', '854', '531', '635', '719', '749', '599', '703', '654', '779', '636']
    Main(cities, 'BIM', 5)
    print('Done')
```
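As mentioned above, the 1,215 raw records still need cleaning before analysis. One typical step is turning the salary strings into numbers. The sketch below assumes that salaries arrive as monthly ranges like `8K-12K` (thousands of CNY) and that any other value, such as a negotiable salary, should be treated as missing. That format is an assumption you should check against the actual CSV. `parse_salary` and `midpoint` are hypothetical helpers.

```python
import re

# Assumed format: "<low>K-<high>K", e.g. "8K-12K" (thousands of CNY per month)
SALARY_RANGE = re.compile(r'^\s*(\d+(?:\.\d+)?)[Kk]\s*-\s*(\d+(?:\.\d+)?)[Kk]\s*$')


def parse_salary(text):
    """Return (low, high) in CNY, or None if text is not a K-range."""
    m = SALARY_RANGE.match(text)
    if m is None:
        return None
    low, high = (float(g) * 1000 for g in m.groups())
    return low, high


def midpoint(text):
    """Midpoint of the salary range, or None when it cannot be parsed."""
    parsed = parse_salary(text)
    return None if parsed is None else (parsed[0] + parsed[1]) / 2


for s in ['8K-12K', '10k-15k', '薪资面议']:
    print(s, parse_salary(s), midpoint(s))
```

Using the midpoint is only one simple way to reduce a range to a single number for comparisons across cities. Keeping both bounds as separate columns preserves more information.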

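WeChat official account: xuebim

Following BIM developments in the construction industry, researching new building technologies, and gathering the latest industry news.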


BIM建筑网

